This year's announcements at WWDC came at their fastest pace yet, making it easy to miss some of the most important changes coming to your devices in September. This article focuses on Siri in iOS 13, and more specifically iOS 13.1, since some features were pushed back. Don't worry, though: all the features will be available to the public shortly after the new iPhones drop. This is a brief overview, as I plan on more specific how-to write-ups in the future covering Shortcuts and other updates.

Siri's Voice

The nerds in the room know that when Apple acquired Siri, a spin-off of SRI International, her voice was an amalgamation of a voice actress's recordings and computer voice generation. That changes this fall, since Siri's voice will now be fully generated by an advanced machine learning (ML) algorithm. This allows the voice to keep evolving to sound more natural, and could let Apple put Siri in more places, since the code base should be smaller, in theory. A more natural-sounding voice assistant takes away some of the friction of talking to a computer and opens the door to a more conversational assistant.

Siri Intelligence

Siri has a wider presence across iOS with every update, not just as a voice but in other actions as well. This is called "Siri Intelligence," and it drives systems like QuickType suggestions, caller ID guessing, and suggested calendar entries. Of course, these systems are not really "Siri" in a strict sense, but because they are the kind of tasks a personal assistant would handle, Apple attributes them to Siri. What ties them together is Apple's work to group all of iOS's actions together in the background. Simple marketing? Sure, but let's not argue with what they want to call their ML.

With iOS 13, it will do even more to be proactive. If a friend sends you a link in iMessage and you then open Safari, your favorites page will show the link at the bottom in a "suggested from iMessage" section. Maps will surface recent searches or show you locations referenced in Mail or Messages. And now Siri will detect possible reminders in what you see in other apps and suggest them. This is all done privately and locally, of course, so no need to worry about your data being snooped. These little conveniences across the OS make you want to go back to first-party apps, because they are so well optimized to work with these features. Let's hope developers have some way to tie relevant data back to Siri as well, other than Shortcuts of course.

Shortcuts

Previously available only as a download from the App Store, Shortcuts will now be a pre-installed app and take a front position around the OS. Formerly Workflow, Shortcuts has gone through some changes since its initial release under the new Apple moniker. System actions, automation, and share sheet tie-ins are now the bedrock of the system, and will be facing every user, savvy or not. Suggestions for new shortcut actions appear throughout searches and menus, and the deep menu in Settings for managing "donated" shortcuts has moved to the Gallery. No more need to differentiate between Siri shortcuts, donated shortcuts, and in-app shortcuts; it's just Shortcuts in this release.

[Image: The new iconography and colors for Shortcuts stand out in Dark Mode]

On top of this, actions have been blown wide open to third-party developers. Previously you would need a hacky URL scheme or clipboard manipulation to pass data from one shortcut or intent to another. Now developers can build their app shortcuts to receive input from a step in the shortcut itself. This means you can more easily string shortcut actions together, which makes the app approachable to even the greenest novice. Let's not forget that experts who live in the app day to day can share their shortcuts via a link generated in the app, so no matter your skill level, you are only a click away from a complex automation that will improve your life.
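To make the idea of input passing concrete, here is a minimal sketch in Python of actions chained so that each step receives the previous step's output. This is a toy model, not the Shortcuts app or Apple's developer API; `Action` and `run_shortcut` are hypothetical names invented purely for illustration.

```python
from dataclasses import dataclass
from typing import Any, Callable, List


@dataclass
class Action:
    """A hypothetical stand-in for one step in a shortcut."""
    name: str
    run: Callable[[Any], Any]  # receives the previous step's output


def run_shortcut(actions: List[Action], initial_input: Any = None) -> Any:
    """Run each action in order, feeding each output into the next action."""
    value = initial_input
    for action in actions:
        value = action.run(value)
    return value


# Illustrative steps only; a real shortcut would call into apps instead.
shortcut = [
    Action("Get text", lambda _: "milk, eggs, bread"),
    Action("Split list", lambda text: [item.strip() for item in text.split(",")]),
    Action("Count items", lambda items: len(items)),
]

result = run_shortcut(shortcut)
```

The point is that no step needs the clipboard or a URL scheme to hand data along; each one simply consumes what the step before it produced.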

Automation

Shortcuts now contains the Home app's automations and includes a new automation category called "Personal." Shortcuts and HomeKit scenes can now be chained together and triggered by events like time of day, location, and even an app opening. Apple has also opened up the NFC reader on newer iPhones (XS and XR only for now), which lets you designate a specific NFC tag to trigger a specific action when tapped. HomeKit speakers are also included in this ability, so now you can play specific playlists when certain conditions are met. These actions can be chained together in various complex ways, and in future write-ups I plan on covering some of my favorite automations.

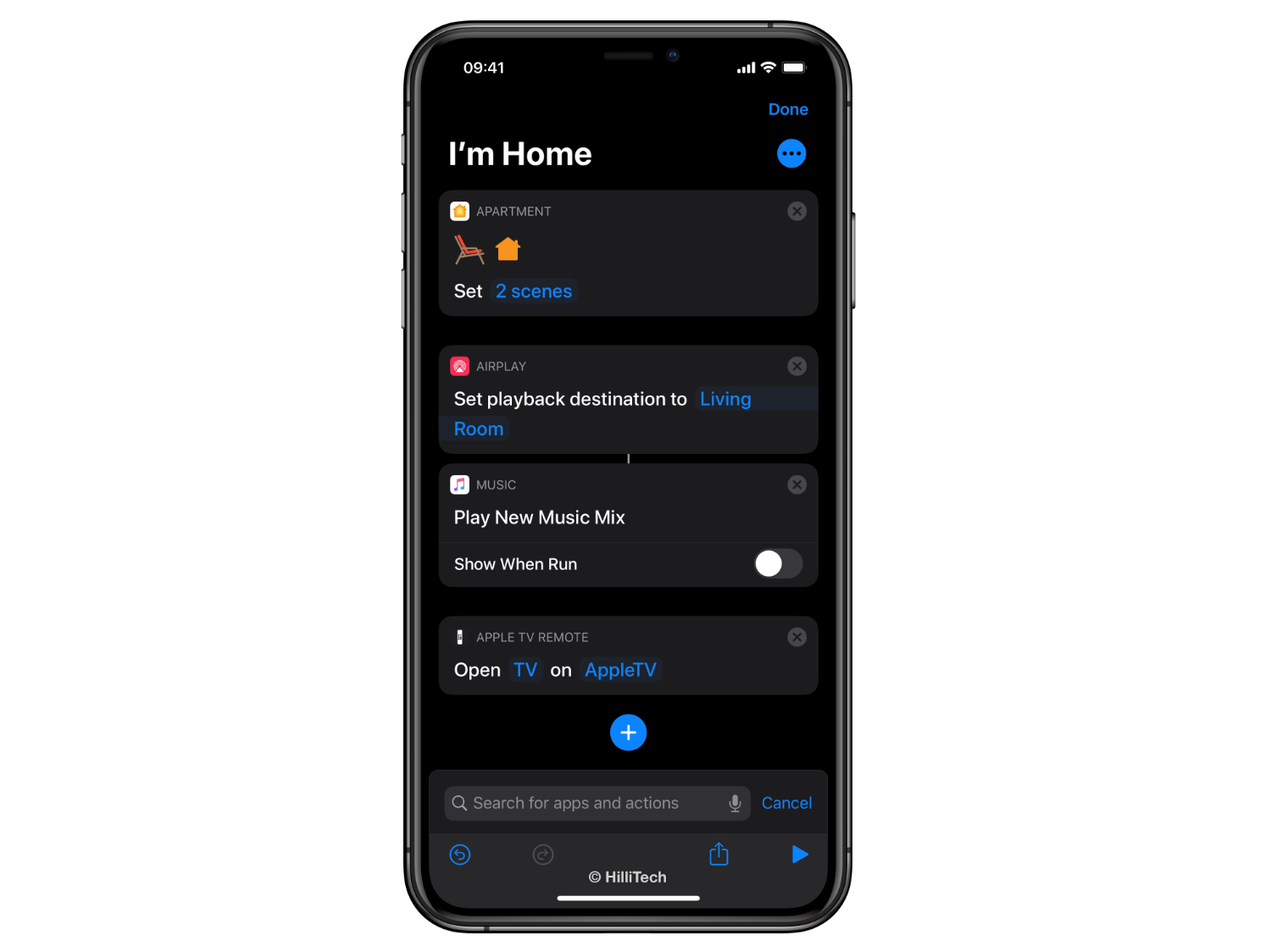

Chaining all of this together, you could:

- set a Home scene that turns the lights on and sets the temperature of your home

- play a certain song or playlist on a specific AirPlay 2 device

- text a loved one that you have arrived, but only if the loved one is not home

- turn on your Apple TV

- launch a specific app on your TV

All with the one condition that you've arrived home during a certain time frame.
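The chain above can be sketched as a small Python model: one trigger (arriving home inside a time window) guarding a list of steps, one of which carries its own condition. This is only an illustration of the logic, not how the Shortcuts app is implemented; `Step`, `Automation`, and the context keys are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import time
from typing import Callable, Dict, List, Optional


@dataclass
class Step:
    """One hypothetical action, optionally guarded by its own condition."""
    description: str
    condition: Optional[Callable[[Dict], bool]] = None


@dataclass
class Automation:
    """A hypothetical 'arrive home within a time window' automation."""
    window_start: time
    window_end: time
    steps: List[Step] = field(default_factory=list)

    def fire(self, ctx: Dict) -> List[str]:
        """Return the descriptions of the steps that would run for this event."""
        arrived = ctx["event"] == "arrive_home"
        in_window = self.window_start <= ctx["now"] <= self.window_end
        if not (arrived and in_window):
            return []  # trigger condition not met, so nothing runs
        return [
            step.description
            for step in self.steps
            if step.condition is None or step.condition(ctx)
        ]


evening_arrival = Automation(
    window_start=time(17, 0),
    window_end=time(22, 0),
    steps=[
        Step("Set the home scene (lights and temperature)"),
        Step("Play a playlist on an AirPlay 2 speaker"),
        Step("Text a loved one that you have arrived",
             condition=lambda ctx: not ctx["loved_one_home"]),
        Step("Turn on the Apple TV"),
        Step("Launch an app on the Apple TV"),
    ],
)

# The loved one is already home, so the text message step is skipped.
ran = evening_arrival.fire(
    {"event": "arrive_home", "now": time(18, 30), "loved_one_home": True}
)
```

Keeping the trigger separate from the per-step conditions mirrors the list: the time window decides whether anything runs at all, while the "only if the loved one is not home" check affects a single step.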

[Image: The simplified Shortcuts interface with this easy-to-build shortcut]

As I write this, my printer's app just got an update with Siri Shortcuts support, so excuse me while I make a shortcut that prints "YOU'RE FIRED" in big letters like in 'Back to the Future Part II'.

Other Bits

A new Indian-accented English voice, multi-user support on HomePod, and conversational shortcuts are coming as well, but are not yet implemented. We will have to wait until September to test the HomePod updates, since Apple does not offer a beta of the HomePod's OS. I'm testing a few apps with the new Shortcuts system and will be rebuilding all of my shortcuts from scratch to see how I can simplify them for the new updates.

Thanks for reading, and let me know on Twitter if you have any questions about Siri or Shortcuts; I will help as best I can. I also recommend giving @mattcassinelli a follow, since he is the go-to expert on automation with Siri.